Upgrading a Kops-created Kubernetes cluster using Terraform

01 Apr 2021 | tags: Kubernetes Kops Terraform

Rationale TL;DR

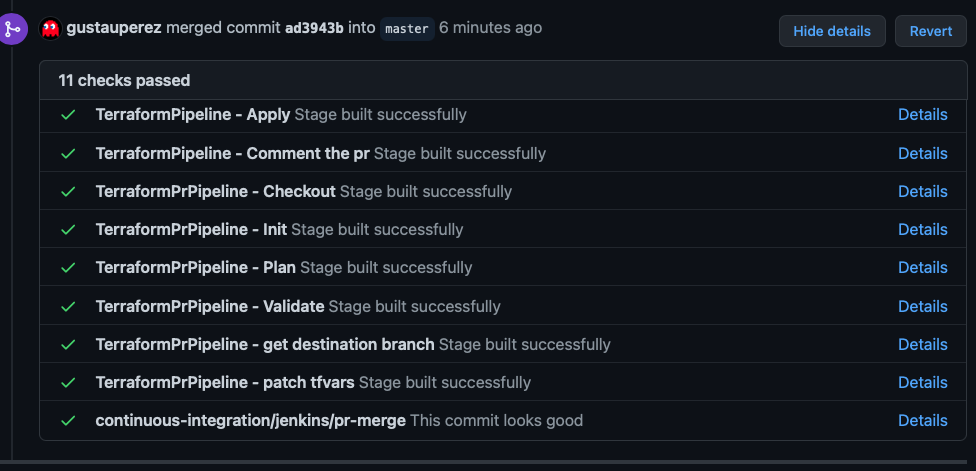

We want to use Terraform with Jenkins to manage the cluster updates. For now, we will focus only on the cluster config.

The solution

First of all, there are a few things we need to know beforehand:

- Cluster name: the name of the cluster we want to manage, e.g. my-cluster.

- The state location on S3: where Kops keeps the state of the cluster, e.g. s3://my-bucket. In this case, the state is in the root of the bucket.

- Optionally, the names of the instance groups (IG) Kops manages. You can list them with:

kops get instancegroups --name my-cluster --state s3://my-bucket
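Both values are repeated in every command that follows, so it can help to keep them in one place. This is a minimal sketch and not part of the original workflow: CLUSTER_NAME and list_ig_cmd are names made up for the example, while KOPS_STATE_STORE is the environment variable Kops reads when --state is omitted.

```shell
# Values from the examples in this post; replace them with your own.
CLUSTER_NAME="my-cluster"
# Kops falls back to this variable when --state is not given.
export KOPS_STATE_STORE="s3://my-bucket"

# Print the command that lists the instance groups, so it can be reviewed
# before running it (hypothetical helper).
list_ig_cmd() {
  printf 'kops get instancegroups --name %s --state %s\n' "$CLUSTER_NAME" "$KOPS_STATE_STORE"
}

list_ig_cmd
```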

With this info, the first thing to do is to edit the Kops config. Open a terminal and change to the root of the Terraform config. There, issue the following command:

kops edit cluster --name my-cluster --state s3://my-bucket

This will open your editor of choice, mine is vim, and load the Kops config. Look for the kubernetesVersion entry:

...
    allowContainerRegistry: true
    legacy: false
  kubeAPIServer:
    runtimeConfig:
      autoscaling/v2beta1: "true"
  kubelet:
    anonymousAuth: false
    authenticationTokenWebhook: true
    authorizationMode: Webhook
  kubernetesApiAccess:
  - 0.0.0.0/0
  kubernetesVersion: 1.18.3
...
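The same value can also be read without opening the editor, which is handy in a script. The sketch below is only an illustration: k8s_version_from_spec is a hypothetical helper that picks the kubernetesVersion line out of YAML on stdin, and it is fed a literal fragment here instead of real Kops output.

```shell
# Read a cluster spec in YAML on stdin and print the value of kubernetesVersion.
k8s_version_from_spec() {
  sed -n 's/^[[:space:]]*kubernetesVersion:[[:space:]]*//p' | tr -d "\"'" | head -n 1
}

# Example with a literal fragment; in practice the input would come from
#   kops get cluster --name my-cluster --state s3://my-bucket -o yaml
printf 'spec:\n  kubernetesApiAccess:\n  - 0.0.0.0/0\n  kubernetesVersion: 1.18.3\n' | k8s_version_from_spec
```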

In my case I’m running 1.18.3. Change it to the version you want to upgrade to and save the YAML file. Saving stores the config in S3, but nothing changes in the cluster yet; we still need to apply those changes. In our case, that means regenerating our Terraform config. We would do it like this:

kops update cluster \
  --name=my-cluster \
  --state=s3://my-bucket \
  --out=. \
  --target=terraform

If we are in the right directory, where the previous Terraform config was, this will overwrite it, usually changing the AMI to use and the launch configuration of the different autoscaling groups (ASGs). In my case, three different ASGs are used (one for the masters, and two for two different instance groups).
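Since --out=. writes into the current directory, a small guard can catch the case where the command is run from the wrong place. This is a sketch under one assumption: that the previously generated config is in a file named kubernetes.tf, the name Kops normally gives its Terraform output (check your own repository). The function name is made up for the example.

```shell
# Succeed only if the given directory (default: the current one) already
# holds a Kops-generated Terraform config. The file name is an assumption.
in_kops_terraform_dir() {
  dir="${1:-.}"
  [ -f "$dir/kubernetes.tf" ]
}

if in_kops_terraform_dir; then
  echo "kubernetes.tf found, this looks like the right directory"
else
  echo "kubernetes.tf not found, check the current directory" >&2
fi
```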

Finally, it’s time to apply the config. Let’s assume we have everything needed to run Terraform. In this scenario, first review the changes Kops made to the Terraform files with git diff -p. If everything’s ok, validate the config and review the plan:

terraform validate && terraform plan

If everything’s ok, we can apply them with:

terraform apply --auto-approve
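Since the goal is to run this from Jenkins, a non-interactive variant may be useful. The sketch below is one possible shape, not the pipeline used in this post. It relies on terraform plan -detailed-exitcode, which exits with 0 when there is nothing to change, 1 on error and 2 when there are changes, and on applying a saved plan file. The function name, the TERRAFORM_BIN variable and the tfplan file name are made up for the example.

```shell
# Validate, plan, and apply only when the plan reports changes.
# TERRAFORM_BIN is a hypothetical override so the flow can be tried with a stub.
plan_and_apply() {
  tf="${TERRAFORM_BIN:-terraform}"
  "$tf" validate || return 1

  status=0
  "$tf" plan -detailed-exitcode -out=tfplan || status=$?

  case "$status" in
    0) echo "no changes" ;;
    2) "$tf" apply tfplan ;;   # a saved plan is applied without a prompt
    *) echo "plan failed" >&2; return 1 ;;
  esac
}
```

Calling plan_and_apply from the directory that holds the generated config runs the whole sequence; nothing is executed just by defining the function.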

So far, no rocket science. Terraform changes your ASGs so that new nodes are launched with the new AMIs, but it does not touch the nodes that are currently running. So, if you run kubectl get nodes, you will still see your nodes running the previous version. Now it’s time to upgrade the cluster. To do so, assuming that you run the upgrade one group of nodes at a time, you need to run something like this:

kops rolling-update cluster my-cluster --state s3://my-bucket --instance-group master-ig --yes

This will upgrade the master ASG: it evicts all the pods on the masters, one by one, kills each node and brings it back with the new version. I strongly suggest upgrading the master ASG first. If you run just one master, you’ll lose connectivity with the cluster for a while. Please bear in mind that

Once the masters are back, you can proceed with the remaining instance groups. For example:

kops rolling-update cluster my-cluster --state s3://my-bucket --instance-group elasticsearch --yes

Because I’m running a dedicated ASG for ES, I prefer to upgrade it manually. At this point I could, instead, update all the remaining instance groups by doing something like this:

kops rolling-update cluster my-cluster --state s3://my-bucket --yes

However, as I said, I have a dedicated IG running ES. Moreover, you can’t simply upgrade the nodes without stopping the ES cluster; you need to gracefully stop the cluster before stopping the ES nodes. That’s why I prefer to upgrade the ES IG manually by specifying --instance-group elasticsearch.
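Once every instance group has been rolled, kubectl get nodes should no longer show the previous version. A minimal sketch of that check, assuming the default column layout of kubectl get nodes --no-headers (NAME, STATUS, ROLES, AGE, VERSION). The function name, the node names and the target version v1.19.7 are invented for illustration.

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print the names
# of the nodes whose VERSION column (the 5th) differs from the expected one.
nodes_not_on_version() {
  awk -v want="$1" '$5 != want { print $1 }'
}

# Example with made-up data; in practice:
#   kubectl get nodes --no-headers | nodes_not_on_version v1.19.7
printf '%s\n' \
  'node-a Ready master 10m v1.19.7' \
  'node-b Ready node 90d v1.18.3' | nodes_not_on_version v1.19.7
```

An empty result would mean that all nodes report the expected version.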